The Relentless March of Computing Power

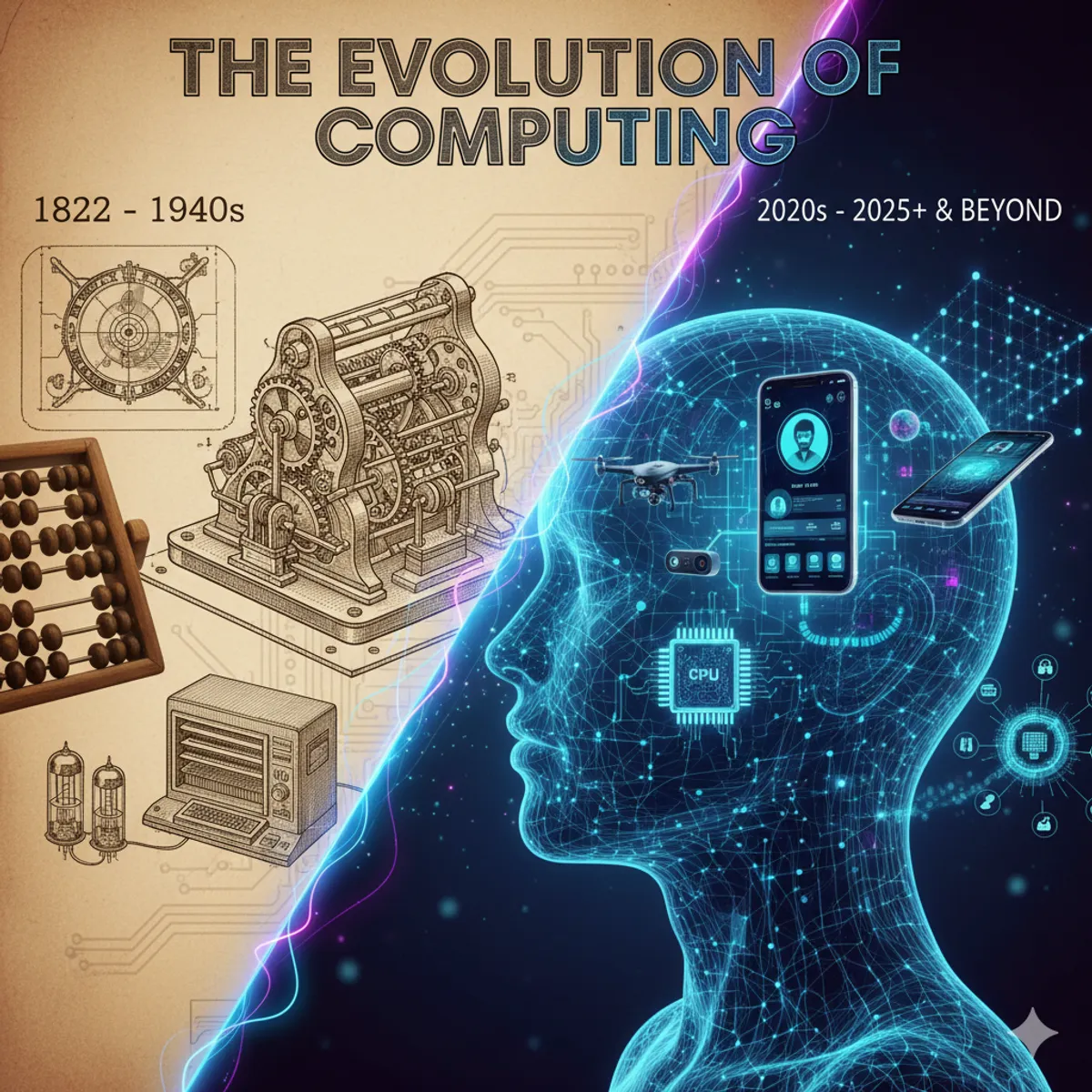

The journey of the computer is one of the most compelling stories of human ingenuity, marked by exponential growth in capability and a relentless shrinking in size. From room-sized behemoths to pocket-sized supercomputers, the evolution of computing has fundamentally reshaped every aspect of modern life.

The Dawn of the Digital Age: Early Computing (Pre-1950s)

Before electronic computers, people relied on manual and mechanical aids such as the abacus, Napier’s bones, and Blaise Pascal’s Pascaline. Charles Babbage’s Analytical Engine, designed in the 1830s but never completed, laid important theoretical groundwork. The digital revolution itself began with the wartime need for rapid calculation.

Definition (ENIAC: The First General-Purpose Electronic Digital Computer)

Unveiled in 1946, the Electronic Numerical Integrator and Computer (ENIAC) weighed about 30 tons, occupied roughly 1,800 square feet, and drew about 150 kW of power. It marked a pivotal shift from mechanical to electronic computation.

The Mainframe Era (1950s-1960s)

The first commercial computers, such as the UNIVAC I (1951), were built with vacuum tubes, and later generations switched to transistors. These machines were expensive and required specialized operators. IBM dominated the era with its mainframes, which large corporations and governments used primarily for data processing.

The Rise of Personal Computing (1970s-1980s)

The invention of the microprocessor, which placed an entire central processing unit on a single chip (the Intel 4004 appeared in 1971), was a game-changer that made computers smaller and more affordable. It led to the personal computer (PC), popularized by companies such as Apple and IBM, which brought computing power into homes and small businesses.

Tip (The Apple II and IBM PC)

The Apple II (1977) and the IBM Personal Computer (1981) were instrumental in democratizing computing, setting the stage for a mass-market software industry and widespread adoption.

The Internet Revolution and Mobile Computing (1990s-2010s)

The World Wide Web transformed computers from standalone tools into interconnected portals. Laptops became commonplace, and then smartphones and tablets ushered in the era of mobile computing, putting immense processing power in everyone’s pocket.

- 1990s: Desktop PCs dominate, while the Internet and graphical user interfaces go mainstream.

- 2000s: Laptops proliferate, social media rises, and early smartphones appear.

- 2010s: Smartphones become ubiquitous, tablet computers gain popularity, and cloud computing becomes a major force.

The Present and Near Future (2020-2025): AI, Cloud, and Edge

Today, computing is characterized by interconnectedness, intelligence, and accessibility.

Explanation (Artificial Intelligence at the Core)

AI, once a niche academic pursuit, is now embedded in everything from search engines and recommendation systems to autonomous vehicles and medical diagnostics, driven by massive computational power.

- Cloud Computing: Continues to grow, offering scalable resources and services.

- Edge Computing: Processing data closer to its source, crucial for real-time AI applications and IoT.

- Artificial Intelligence (AI) & Machine Learning (ML): Driving advancements in data analysis, natural language processing, computer vision, and automation. Expect more personalized and predictive AI in daily applications.

- 5G Technology: Enhances connectivity, enabling faster data transfer and supporting more complex IoT ecosystems.

- Enhanced Cybersecurity: As digital reliance grows, so does the need for sophisticated security measures to protect data and infrastructure.

- Virtual and Augmented Reality (VR/AR): Increasingly integrated into professional and entertainment applications, demanding more powerful and efficient computing.

Beyond 2025: The Horizon of Computing

The future promises even more radical transformations:

Danger (Quantum Computing: A Paradigm Shift)

Still in its nascent stages, quantum computing has the potential to solve problems currently intractable for even the most powerful classical supercomputers, with profound implications for cryptography, materials science, and drug discovery.

- Quantum Computing: Although not yet mainstream, quantum research continues to make significant progress, promising revolutionary computational power for specific, complex problems.

- Neuromorphic Computing: Chips modeled on the structure of the human brain, aiming for highly energy-efficient AI processing.

- Ubiquitous and Invisible Computing: Computers will become even more integrated into our environment, often operating seamlessly in the background without direct user interaction.

- Sustainable Computing: Growing focus on energy efficiency and environmentally friendly hardware.

Conclusion

The journey of computing from cumbersome machines to intelligent, invisible systems is a testament to human innovation. As we approach 2025 and look beyond, the convergence of AI, quantum computing, and advanced connectivity promises to unlock unprecedented capabilities, further cementing computing as the driving force of progress in the 21st century.
